Loan Default Prediction Using Logistic Regression and Random Forest

A large number of vehicle loan applications must be reviewed to determine whether each applicant qualifies for a loan. Manually screening this volume of applications is time-consuming and inefficient, which leads to delayed responses, customer dissatisfaction, and ultimately the loss of potential clients. The manual process also consumes significant company resources, including employee time and effort.

Project Objectives

Reduce the company's wasted resources (time, …) and increase customer satisfaction by shortening the approval waiting time for each loan application. To achieve this, predictive machine learning algorithms (Logistic Regression and Random Forest) are applied to develop a model that accurately classifies borrowers as likely or unlikely to default.

Methodology

Data Source

Data Overview

The dataset contains 233,154 loan applications with 41 features. These features capture customer information (such as demographics and employment type); loan and asset details (such as disbursed amount, asset cost, and branch); document verification flags; and historical loan behavior. The target variable, LOAN_DEFAULT, indicates whether the customer defaulted.

Data Preprocessing

To ensure data quality and model performance, the following preprocessing steps were applied:

- Handling Missing Values – Missing values were removed or imputed, depending on the feature type.

- Type Casting – Some columns were converted to more appropriate data types.

- Data Splitting – The dataset was split into training and testing sets in an 80:20 ratio to evaluate model performance.

- Class Balancing – SMOTE (Synthetic Minority Over-sampling Technique) was used to handle class imbalance.
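The splitting and balancing steps can be sketched in Python as follows. This is an illustration under stated assumptions, not the project's code: the data are synthetic, the split is stratified (the text only specifies the 80:20 ratio), and `smote` is a hypothetical helper that implements the standard SMOTE interpolation by hand; in practice the `SMOTE` class from the imbalanced-learn package is the usual choice. Here SMOTE is applied to the training set only, so synthetic samples never reach the test set.

```python
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.neighbors import NearestNeighbors


def smote(X, y, minority_label=1, k_neighbors=5, random_state=0):
    """Hypothetical helper: oversample the minority class with SMOTE-style
    interpolation until both classes have the same number of rows."""
    rng = np.random.default_rng(random_state)
    X_min = X[y == minority_label]
    n_needed = int((y != minority_label).sum()) - len(X_min)
    if n_needed <= 0:
        return X, y
    # k nearest minority neighbours of each minority sample; column 0 is the sample itself.
    nn = NearestNeighbors(n_neighbors=k_neighbors + 1).fit(X_min)
    _, neighbours = nn.kneighbors(X_min)
    base = rng.integers(0, len(X_min), size=n_needed)
    chosen = neighbours[base, rng.integers(1, k_neighbors + 1, size=n_needed)]
    # Each synthetic point lies on the segment between a minority sample and one of its neighbours.
    gap = rng.random((n_needed, 1))
    X_new = X_min[base] + gap * (X_min[chosen] - X_min[base])
    X_res = np.vstack([X, X_new])
    y_res = np.concatenate([y, np.full(n_needed, minority_label, dtype=y.dtype)])
    return X_res, y_res


# Synthetic stand-in for the selected predictors, with roughly 20% defaulters.
rng = np.random.default_rng(42)
X = rng.normal(size=(1000, 9))
y = (rng.random(1000) < 0.2).astype(int)

# 80:20 split; stratify keeps the default rate the same in both sets (an assumption).
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=42
)

# Balance the training set only; the test set keeps the real class distribution.
X_train_bal, y_train_bal = smote(X_train, y_train)
print("train before:", np.bincount(y_train), "after:", np.bincount(y_train_bal))
```

Oversampling before the split would let synthetic points built from future test rows leak into training and inflate the test scores, which is why the split comes first in this sketch.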

Feature Engineering

Additional features were created to enhance predictive power, such as:

- Debt-to-income ratio: calculated by dividing total debt by monthly income.

- Loan-to-value ratio: calculated by dividing the loan amount by the vehicle value.

- Age: calculated for each applicant.
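The three engineered features can be sketched with pandas. The column names (`total_debt`, `monthly_income`, `loan_amount`, `vehicle_value`, `date_of_birth`, `application_date`) are hypothetical placeholders, as are the choices to measure age at the application date and to turn a zero denominator into a missing value rather than infinity.

```python
import pandas as pd


def add_engineered_features(df: pd.DataFrame) -> pd.DataFrame:
    """Return a copy of df with debt-to-income, loan-to-value and age columns added.
    All column names are hypothetical placeholders."""
    out = df.copy()
    # Zero denominators become NaN (missing) instead of producing infinite ratios.
    income = out["monthly_income"].where(out["monthly_income"] != 0)
    value = out["vehicle_value"].where(out["vehicle_value"] != 0)
    out["debt_to_income"] = out["total_debt"] / income
    out["loan_to_value"] = out["loan_amount"] / value
    # Approximate age in completed years at the application date (365.25-day years).
    days = (out["application_date"] - out["date_of_birth"]).dt.days
    out["age"] = (days // 365.25).astype(int)
    return out


# Two hypothetical applications; the second has zero reported monthly income.
applications = pd.DataFrame({
    "total_debt": [12000, 5000],
    "monthly_income": [4000, 0],
    "loan_amount": [50000, 30000],
    "vehicle_value": [62500, 40000],
    "date_of_birth": pd.to_datetime(["1990-06-15", "1985-01-01"]),
    "application_date": pd.to_datetime(["2018-08-01", "2018-08-01"]),
})
feats = add_engineered_features(applications)
print(feats[["debt_to_income", "loan_to_value", "age"]])
```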

Encoding Categorical Variables

- Categorical features such as employment type were encoded using one-hot encoding.
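One-hot encoding can be sketched with `pandas.get_dummies`. The column name `employment_type` and the sample rows are hypothetical, and labelling missing values as `"Unknown"` before encoding is an assumption, not a step stated in the project.

```python
import pandas as pd

# Hypothetical sample; "employment_type" stands in for the dataset's employment column.
df = pd.DataFrame({
    "employment_type": ["Salaried", "Self employed", None, "Salaried"],
    "loan_amount": [50000, 30000, 42000, 61000],
})

# Give missing categories an explicit label so they get their own indicator column (assumption).
df["employment_type"] = df["employment_type"].fillna("Unknown")

# One 0/1 column per category; the original text column is dropped.
encoded = pd.get_dummies(df, columns=["employment_type"], dtype=int)
print(encoded)
```

When the encoding must be learned from the training split alone and applied unchanged to new applications, scikit-learn's `OneHotEncoder(handle_unknown="ignore")` is a common alternative.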

Feature Scaling

- Numerical features were standardized to improve model convergence.

Feature Selection

- The nine factors considered to have the most direct effect on loan default were selected as predictors to keep the model simple.
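The standardization step can be sketched with scikit-learn's `StandardScaler`, applied to the chosen predictors. The column names and values are hypothetical, and the three-column `selected` list only stands in for the nine predictors, which are not named here. The scaler is fit on the training rows and reused on the test rows so that no test-set statistics leak into training.

```python
import pandas as pd
from sklearn.preprocessing import StandardScaler

# Hypothetical training and test rows after feature engineering.
train = pd.DataFrame({
    "loan_to_value": [0.80, 0.75, 0.90, 0.70],
    "age": [28, 33, 45, 39],
    "disbursed_amount": [50000, 30000, 42000, 61000],
    "branch_id": [67, 67, 22, 3],
})
test = pd.DataFrame({
    "loan_to_value": [0.85], "age": [31], "disbursed_amount": [45000], "branch_id": [22],
})

# Keep only the chosen predictors (a hypothetical subset standing in for the nine).
selected = ["loan_to_value", "age", "disbursed_amount"]

scaler = StandardScaler()
X_train = scaler.fit_transform(train[selected])  # learns each column's mean and std from training rows
X_test = scaler.transform(test[selected])        # reuses the training statistics on the test rows
```

Scaling mainly matters for Logistic Regression; Random Forest splits on thresholds of individual features, so its predictions are largely unaffected by this kind of rescaling.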

Modeling Techniques

Two machine learning algorithms were implemented:

- Logistic Regression: A statistical model that predicts the probability of default using a linear combination of input features.

- Random Forest: An ensemble method using multiple decision trees to improve classification accuracy and reduce overfitting.
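Training both models can be sketched with scikit-learn. The data come from `make_classification` as a synthetic stand-in for the nine selected predictors, and the hyperparameters (`max_iter=1000`, `n_estimators=200`, the random seeds) are illustrative assumptions rather than the project's settings.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in: 9 predictors, about 80% non-default (0) and 20% default (1).
X, y = make_classification(n_samples=2000, n_features=9, n_informative=5,
                           weights=[0.8], random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=0
)

models = {
    # The scaler lives inside the pipeline, so it is fit on training data only.
    "logistic_regression": make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000)),
    # Many decision trees on bootstrap samples; predicted probabilities are averaged across trees.
    "random_forest": RandomForestClassifier(n_estimators=200, random_state=0),
}

probabilities = {}
for name, model in models.items():
    model.fit(X_train, y_train)
    # Column 1 of predict_proba is the estimated probability of default.
    probabilities[name] = model.predict_proba(X_test)[:, 1]
    print(name, "test accuracy:", round(model.score(X_test, y_test), 3))
```

The Random Forest is left unscaled because its splits do not depend on feature scale; only the Logistic Regression branch gets a `StandardScaler`.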

Model Evaluation

The models were evaluated using the following metrics:

- Accuracy: Overall correctness of predictions

- Precision: Correct positive predictions over total predicted positives

- Recall: Correct positive predictions over actual positives

- F1 Score: Harmonic mean of precision and recall

Confusion matrices were also analyzed to understand the types of misclassifications.
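The four metrics follow directly from the confusion-matrix counts, treating default as the positive class. The sketch below computes them by hand; `classification_metrics` is a hypothetical helper and the counts are hypothetical, not the project's results.

```python
def classification_metrics(tp, fp, fn, tn):
    """Compute accuracy, precision, recall and F1 from confusion-matrix counts
    (positive class = default). Returns 0.0 where a ratio is undefined."""
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical counts for 1,000 test applications, 200 of them actual defaulters.
m = classification_metrics(tp=120, fp=180, fn=80, tn=620)
print({k: round(v, 2) for k, v in m.items()})
```

With real predictions, scikit-learn's `accuracy_score`, `precision_score`, `recall_score`, and `f1_score` give the same values, and `tn, fp, fn, tp = confusion_matrix(y_true, y_pred).ravel()` extracts the counts for a binary problem with labels 0 and 1.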

Tools and Technologies

The project was implemented using the following tools:

- Python – programming language

- Pandas, NumPy – data manipulation and analysis

- Scikit-Learn – machine learning algorithms and evaluation

- Google Colab – development and visualization environment

Results and Discussion

Model Performance:

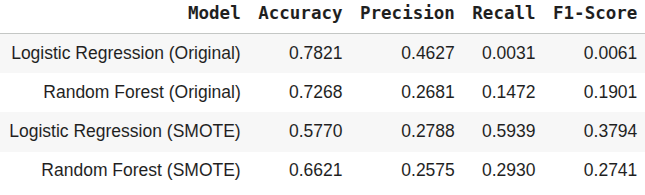

On the original imbalanced data, both models achieved high accuracy but extremely low recall, meaning they failed to identify defaulters. After applying SMOTE, recall improved significantly, especially for Logistic Regression, although accuracy decreased. This trade-off is expected and acceptable in credit risk problems, where identifying risky customers is more important than overall accuracy.
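Why high accuracy can coexist with near-zero recall is easiest to see with a degenerate model that never predicts default. The numbers below are hypothetical (a test set with a 20% default rate), not the project's data.

```python
# Hypothetical test set: 1,000 applications, 200 actual defaulters (20%).
y_true = [1] * 200 + [0] * 800
# A degenerate model that predicts "non-default" (0) for everyone.
y_pred = [0] * 1000

accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
caught = sum(t == 1 and p == 1 for t, p in zip(y_true, y_pred))
recall = caught / sum(y_true)
print(f"accuracy={accuracy:.2f}, recall={recall:.2f}")  # accuracy=0.80, recall=0.00
```

Accuracy simply mirrors the share of non-defaulters, so it can look strong while the model catches no defaulters at all.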

Analysis:

For Logistic Regression (Before Class Balancing)

- The model predicts almost every applicant as non-default.

- It misses ~99.7% of defaulters (recall ≈ 0.3%).

- Its high accuracy is therefore misleading.

For Random Forest (Before Class Balancing)

- Better than Logistic Regression

- Still biased toward the majority class

- Catches only ~15% of defaulters

For Logistic Regression (After Fixing Imbalance with SMOTE)

- Recall jumped from 0.3% to 59%.

- Accuracy dropped.

- The model now identifies most defaulters.

[This is good credit-risk model behavior: missing defaulters is worse than flagging safe customers.]

For Random Forest (After SMOTE)

- Some recall improvement

- Precision still low

- Possibly mild overfitting or conservative splits

[The model may need hyperparameter tuning or threshold adjustment.]
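Threshold adjustment can be sketched as picking the highest probability cut-off that still reaches a target recall, instead of the default 0.5. The helper names `threshold_for_recall` and `recall_at`, the 0.75 target, and the probabilities are hypothetical; in practice the threshold should be chosen on a validation split rather than on the test set.

```python
import numpy as np


def threshold_for_recall(y_true, proba, target_recall):
    """Return the highest cut-off whose recall on (y_true, proba) reaches target_recall."""
    y_true = np.asarray(y_true)
    proba = np.asarray(proba)
    n_pos = (y_true == 1).sum()
    # Scan candidate cut-offs from high to low; recall can only grow as the cut-off drops.
    for t in np.unique(proba)[::-1]:
        recall = ((proba >= t) & (y_true == 1)).sum() / n_pos
        if recall >= target_recall:
            return float(t)
    return float(proba.min())


def recall_at(y_true, proba, threshold):
    """Recall when every applicant with proba >= threshold is flagged as a likely defaulter."""
    y_true = np.asarray(y_true)
    flagged = np.asarray(proba) >= threshold
    return (flagged & (y_true == 1)).sum() / (y_true == 1).sum()


# Hypothetical validation labels (1 = default) and predicted default probabilities.
y_val = [1, 0, 1, 0, 1, 0, 0, 1]
p_val = [0.90, 0.80, 0.45, 0.40, 0.35, 0.30, 0.20, 0.10]

t = threshold_for_recall(y_val, p_val, target_recall=0.75)
print("recall at 0.5:", recall_at(y_val, p_val, 0.5))
print("chosen threshold:", t, "recall:", recall_at(y_val, p_val, t))
```

Lowering the cut-off raises recall at the cost of precision, the same trade-off described in the results; scikit-learn's `precision_recall_curve` returns precision and recall at every candidate threshold, and `GridSearchCV` with `scoring="recall"` or `scoring="f1"` is one way to approach the hyperparameter tuning.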

Conclusion

Logistic Regression with SMOTE is currently the best model:

- Highest recall

- Best F1-score

- Interpretable
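The interpretability of Logistic Regression comes from its coefficients: with standardized inputs, `exp(coefficient)` is the multiplicative change in the odds of default for a one-standard-deviation increase in that feature. The sketch uses synthetic data with two hypothetical features whose true effects are known, not the project's predictors.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic data: default risk rises with feature_0 and falls with feature_1 (hypothetical).
rng = np.random.default_rng(0)
X = rng.normal(size=(2000, 2))
logits = -1.5 + 1.2 * X[:, 0] - 0.8 * X[:, 1]
y = (rng.random(2000) < 1 / (1 + np.exp(-logits))).astype(int)

model = make_pipeline(StandardScaler(), LogisticRegression())
model.fit(X, y)

# make_pipeline names each step after its lowercased class name.
coefs = model.named_steps["logisticregression"].coef_[0]
odds_ratios = np.exp(coefs)
for name, c, ratio in zip(["feature_0", "feature_1"], coefs, odds_ratios):
    print(f"{name}: coef={c:+.2f}, odds ratio per 1 SD = {ratio:.2f}")
```

An odds ratio above 1 means the feature raises the estimated odds of default, below 1 means it lowers them; a Random Forest offers no equally direct per-feature reading.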